Large Hadron Collider (LHC) Ultralight Data Analysis Tools

Website

http://ultralight.caltech.edu/web-site/igrid/

Contact

Harvey Newman, Caltech & CERN

Collaborators

Brazil: Universidade do Estado do Rio de Janeiro; Universidade Estadual Paulista; Universidade de São Paulo

Switzerland: CERN

South Korea: Korea Advanced Institute of Science and Technology; Kyungpook National University

United Kingdom: University of Manchester

United States: Department of Physics and Netlab, Caltech; Stanford Linear Accelerator Center (SLAC); Fermi National Accelerator Laboratory (Fermi); University of Florida; University of Michigan; Vanderbilt University; Cisco Systems Inc.

Description

The Large Hadron Collider (LHC) is a particle accelerator and collider under construction at CERN. It is expected to become the world’s largest and highest-energy particle accelerator in 2008, when commissioning at 7 TeV is completed. The LHC is being funded and built in collaboration with over 2,000 physicists from universities and laboratories in 34 countries. Once it is in operation, about 7,000 physicists from 80 countries will have access to the LHC, with the largest national contingent coming from the US. The LHC will provide proton collisions for four large physics experiments that seek to expand our understanding of the universe (see http://www.isgtw.org/?pid=1000416).

- The ATLAS and CMS collaborations designed multi-purpose detectors; these will hunt for the Higgs boson, traces of supersymmetry and evidence of extra dimensions, among other things.

- ALICE aims to study the quark-gluon plasma, a new phase of matter expected at extreme energy densities.

- LHCb will examine beauty quark physics.

Analysis tools for use on advanced networks will enable physicists to control global grid resources when analyzing major high-energy physics events. Components of this "Grid Analysis Environment" are being developed by such projects as UltraLight, FAST, PPDG, GriPhyN and iVDGL.

For the SC06 Bandwidth Challenge, a team led by Caltech set new records for sustained data transfer between storage systems. Their demonstration of “High Speed Data Gathering, Distribution and Analysis for Physics Discoveries at the Large Hadron Collider” achieved a peak throughput of 17.77 Gbps between clusters of servers on the show floor in Tampa and at Caltech. Following the rules set for the SC06 Bandwidth Challenge, the team used a single 10-Gbps link provided by National LambdaRail that carried data in both directions. Sustained throughput throughout the night prior to the Bandwidth Challenge exceeded 16 Gbps (two gigabytes per second), using just 10 pairs of small servers sending data at 9 Gbps from Tampa to Caltech and eight pairs of servers sending 7 Gbps in the reverse direction.
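The arithmetic behind these SC06 figures can be checked with a short sketch. It uses only the numbers quoted above; the function and constant names are illustrative, the per-direction rates are treated as rounded values, and the utilization figure assumes the 10-Gbps link is full duplex (10 Gbps available in each direction at once), which the text implies but does not state outright.

```python
# Figures quoted in the text above; the per-direction rates are rounded.
LINK_GBPS_PER_DIRECTION = 10.0   # single National LambdaRail link
TO_CALTECH_GBPS = 9.0            # Tampa -> Caltech, 10 server pairs
TO_TAMPA_GBPS = 7.0              # Caltech -> Tampa, 8 server pairs
PEAK_GBPS = 17.77                # peak throughput, both directions combined


def gbps_to_gigabytes_per_second(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8.0


def bidirectional_utilization(total_gbps: float, link_gbps: float) -> float:
    """Fraction of a link's two-way capacity in use.

    Assumption: the link is full duplex, carrying link_gbps in each
    direction simultaneously, so two-way capacity is 2 * link_gbps.
    """
    return total_gbps / (2.0 * link_gbps)


# Sustained rate is the sum of the two directions.
sustained_gbps = TO_CALTECH_GBPS + TO_TAMPA_GBPS

print(f"Sustained: {sustained_gbps:.2f} Gbps "
      f"= {gbps_to_gigabytes_per_second(sustained_gbps):.2f} GB/s")
print(f"Per server pair to Caltech: {TO_CALTECH_GBPS / 10:.3f} Gbps")
print(f"Per server pair to Tampa:   {TO_TAMPA_GBPS / 8:.3f} Gbps")
print("Peak two-way utilization:   "
      f"{bidirectional_utilization(PEAK_GBPS, LINK_GBPS_PER_DIRECTION):.1%}")
```

Under these assumptions, each server pair carried a little under 1 Gbps, and the peak rate used most of the link's combined two-way capacity.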

First prize for the SC|05 Bandwidth Challenge went to the team from Caltech, Fermi and SLAC for their entry “Distributed TeraByte Particle Physics Data Sample Analysis,” which was measured at a peak of 131.57 Gbps of IP traffic. This entry demonstrated high-speed transfers of particle physics data between host labs and collaborating institutes in the US and worldwide. Using state-of-the-art WAN infrastructure and Grid Web Services based on the LHC Tiered Architecture, they showed real-time particle event analysis requiring transfers of Terabyte-scale datasets.

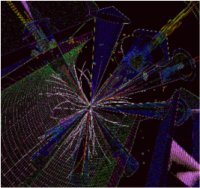

Collisions at CERN's Large Hadron Collider, when it begins operating in 2007, may produce events like this black hole decay, as seen inside the CMS detector. Black holes, if they are created, will quickly evaporate, producing a spray of energetic particles that shoot out from the collision point. [Image courtesy of Ianna Osborne and Julian Bunn, CERN]